Hello,

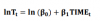

I would like some further clarification on the log-linear function that appears on page 5 of the Ch.11 materials:

GARP's Ch.11 materials write the formula differently, so I'm having some trouble reconciling the two. Forgive me if this is something basic or intuitive (I don't remember much of my high school math), but in GARP's material there is no "ln" in front of "δ0" in the formula shown next to 11.4 on p.189.

Are these two formulas supposed to be equivalent? What difference does it make whether the "ln" appears only on the left side of the equation or on both sides?

Thank you