Hi David:

On your webinar 2010-4[1].a-Valuation, page 18:

The WCS assumes the firm increases its level of investment when gains are realized; i.e., that the firm is “capital efficient.”

I don't clearly understand the point of this assumption.

Here is the relevant excerpt from the book PUTTING VaR TO WORK, page 113:

First, our analysis was developed in the context of a specific model

of the firm’s investment behavior; that is, we assumed that the firm,

in order to remain “capital efficient,” increases the level of investment

when gains are realized. There are alternative models of investment

behaviour, which suggest other aspects of the distribution of returns

should be investigated. For example, we might be interested in the

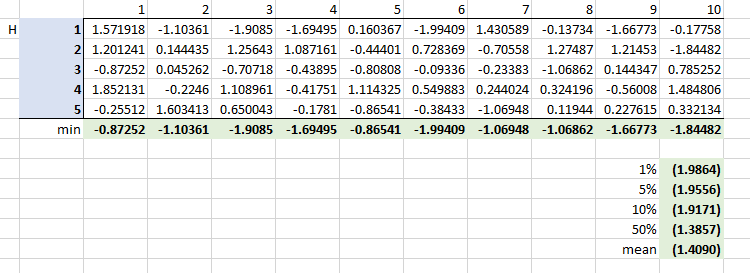

distribution of “bad runs,” corresponding to partial sums of length J

periods for a given horizon of H.
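To make my question concrete, here is a small simulation sketch of how I read the two quantities. This is my own interpretation, not taken from the book or the webinar: the WCS looks at the worst single-period return among H periods (J = 1), while a "bad run" is the worst sum of J consecutive returns within the same horizon. The sketch assumes i.i.d. normal returns; the function names and parameter values (H = 20, J = 5, mu, sigma) are hypothetical.

```python
import random


def worst_run(returns, j):
    """Minimum sum over all windows of j consecutive returns.

    j = 1 gives the worst single-period return (my reading of the WCS);
    j > 1 gives the worst "bad run" of length j.
    """
    if not 1 <= j <= len(returns):
        raise ValueError("need 1 <= j <= number of periods")
    window = sum(returns[:j])
    worst = window
    # Slide the window forward one period at a time.
    for t in range(j, len(returns)):
        window += returns[t] - returns[t - j]
        worst = min(worst, window)
    return worst


def simulate_worst(h, j, n_sims=20000, mu=0.0, sigma=1.0, seed=0):
    """Sorted simulated distribution of the worst j-period run over a horizon of h periods.

    Assumes i.i.d. normal per-period returns (hypothetical mu and sigma).
    """
    rng = random.Random(seed)
    out = []
    for _ in range(n_sims):
        r = [rng.gauss(mu, sigma) for _ in range(h)]
        out.append(worst_run(r, j))
    out.sort()
    return out


def quantile(sorted_vals, p):
    """Simple empirical quantile (no interpolation)."""
    return sorted_vals[int(p * (len(sorted_vals) - 1))]


if __name__ == "__main__":
    H = 20
    for J in (1, 5):
        dist = simulate_worst(H, J)
        print(f"J={J}: 1% quantile of worst run = {quantile(dist, 0.01):.2f}")
```

If I read it correctly, under the "capital efficient" assumption only the J = 1 case matters, whereas a model in which losses accumulate would care about the more severe J-period runs. Is that the right way to think about it?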

Hope you can help me understand it!

Thanks!
