cabrown085

New Member

Apologies in advance for any lack of precision; clearly my background is not in math.

From what I read, variance can be written as two separate formulas.

I believe I understand the first formula:

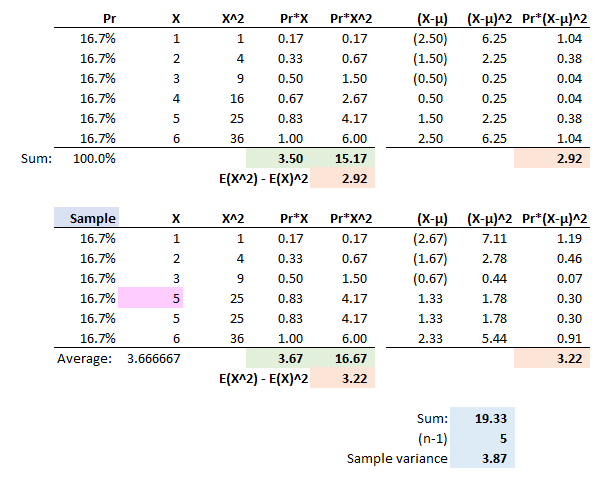

Var(X) = E[(X-u)^2]

where u = E(X):

(1) This means take the summation of the actual values (X) MULTIPLIED by their associated probabilities to arrive at u,

where X is each actual value.

(2) You compute (X - u)^2 for each value of X.

(3) You multiply each value of (X - u)^2 by X's associated probability.

(4) Add the products from step 3 together.
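
To make the four steps concrete, here is a minimal Python sketch. The values and probabilities are made up purely for illustration (they are an assumption, not from any textbook), and the names `values`, `probs`, `u` and `var_definition` are hypothetical.

```python
# Hypothetical discrete distribution (illustrative numbers only).
values = [1, 2, 3]
probs = [0.2, 0.5, 0.3]  # must sum to 1

# Step 1: u = E(X), the sum of value * probability (one single number).
u = sum(x * p for x, p in zip(values, probs))

# Steps 2-4: square each deviation from u, weight it by that value's
# probability, and add the weighted squares together.
var_definition = sum((x - u) ** 2 * p for x, p in zip(values, probs))

print(u, var_definition)
```

With these numbers u comes out to about 2.1 and the variance to about 0.49, up to floating-point rounding.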

However, Var(X) is also defined as:

Var(X)=E(X^2)-[E(X)]^2

I've been trying to wrap my head around this formula:

(1) E(X^2): You take each value of X, THEN square it, and THEN multiply by the associated probability.

(2) [E(X)]^2: I believe that this is the expected value of X (e.g., X*probability), THEN squared. Is this value the same as u, and will it always be the same no matter how many Xs there are?

(3) You then take the difference of steps 1 and 2 for each value of X.

(4) You add the differences together to arrive at variance.
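
One way to check steps like these is to run a small example. The sketch below uses a made-up distribution (an assumption for illustration only) and hypothetical names. Note that it computes E(X^2) and E(X) each as a single total and subtracts once at the end, rather than taking a difference for each value of X.

```python
# Hypothetical discrete distribution (illustrative numbers only).
values = [1, 2, 3]
probs = [0.2, 0.5, 0.3]

# E(X^2): square each value first, weight by its probability, then sum.
e_x2 = sum(x ** 2 * p for x, p in zip(values, probs))

# E(X): one number for the whole distribution (this is u).
e_x = sum(x * p for x, p in zip(values, probs))

# Var(X) = E(X^2) - [E(X)]^2: a single subtraction of two totals.
var_shortcut = e_x2 - e_x ** 2

# For comparison, the definition E[(X - u)^2] on the same numbers.
var_definition = sum((x - e_x) ** 2 * p for x, p in zip(values, probs))

print(var_shortcut, var_definition)
```

On these numbers both results agree at about 0.49, apart from floating-point rounding.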

One of my questions: is one of the two formulas above preferred, or considered faster or more accurate?

Covariance is defined as:

Cov(X,Y)=E{[X-E(X)]*[Y-E(Y)]}

(1) E(X): Similar to above, this means take the summation of the actual values (X) MULTIPLIED by their associated probabilities to arrive at u or E(X). There should only be 1 E(X) value, correct?

(2) E(Y): Similar to above, this means take the summation of the actual values (Y) MULTIPLIED by their associated probabilities to arrive at E(Y). There should only be 1 E(Y) value, correct?

(3) You add up the sum of the series of values and that gives you the covariance.
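
As a rough illustration of this formula, here is a small Python sketch. It assumes a made-up joint distribution in which each (x, y) pair has its own probability; the dictionary `joint` and all its numbers are hypothetical.

```python
# Hypothetical joint distribution: (x, y) -> probability (illustrative only).
joint = {(1, 1): 0.2, (1, 2): 0.1, (2, 1): 0.3, (2, 2): 0.4}

# E(X) and E(Y): each is one number for the whole distribution.
e_x = sum(x * p for (x, y), p in joint.items())
e_y = sum(y * p for (x, y), p in joint.items())

# Cov(X,Y) = E{[X - E(X)] * [Y - E(Y)]}: for each pair, multiply the two
# deviations, weight by the pair's joint probability, then add everything up.
cov_definition = sum((x - e_x) * (y - e_y) * p for (x, y), p in joint.items())

print(e_x, e_y, cov_definition)
```

Here E(X) is about 1.7, E(Y) is about 1.5, and the covariance is about 0.05, up to floating-point rounding.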

Covariance is also defined as:

Cov(X,Y)=E(XY)-E(X)*E(Y)

Are E(X) and E(Y) the same for every instance?
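
A minimal sketch of this second covariance formula follows. It reads the first term as E(XY), the expected value of the product X*Y (that reading is an assumption), and uses a made-up joint distribution with hypothetical names. In the sketch, E(X) and E(Y) are each computed once and reused.

```python
# Hypothetical joint distribution: (x, y) -> probability (illustrative only).
joint = {(1, 1): 0.2, (1, 2): 0.1, (2, 1): 0.3, (2, 2): 0.4}

# E(X) and E(Y) are each computed once, as single numbers.
e_x = sum(x * p for (x, y), p in joint.items())
e_y = sum(y * p for (x, y), p in joint.items())

# E(XY): multiply x by y for each pair, weight by the joint probability, sum.
e_xy = sum(x * y * p for (x, y), p in joint.items())

# Cov(X,Y) = E(XY) - E(X)*E(Y): one subtraction of two totals.
cov_shortcut = e_xy - e_x * e_y

print(e_xy, cov_shortcut)
```

With these numbers E(XY) is about 2.6 and the covariance is about 0.05, up to floating-point rounding.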

Again, like my question for variance: is one of the two formulas above preferred, or considered faster or more accurate?
